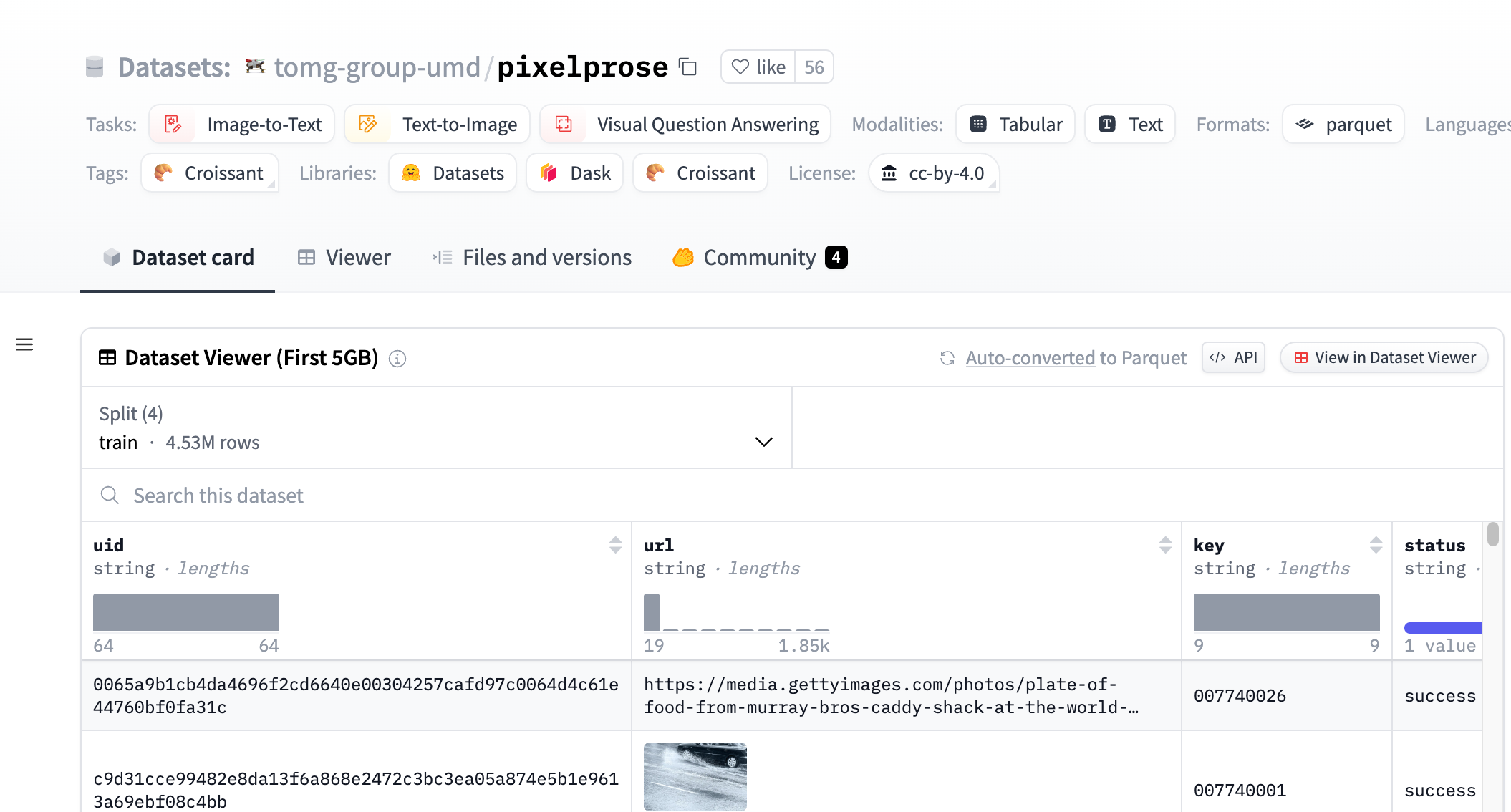

PixelProse

A large-scale image description dataset providing over 16M synthetic image captions.

Tags: AI image tools, AI Image Generator

Introduction:

PixelProse is a large-scale dataset created by tomg-group-umd. It contains more than 16 million detailed image descriptions generated with Gemini 1.0 Pro Vision, an advanced visual-language model. The dataset is a useful resource for developing and improving image-to-text techniques, and it can be used for tasks such as image description generation and visual question answering.

https://pic.chinaz.com/ai/2024/06/24062003165237642503.jpg

Target Audience:

The target audience is researchers and developers in machine learning and artificial intelligence, especially those who work on image recognition, image description generation, and visual question answering systems. The size and diversity of the dataset make it a strong resource for training and testing these systems.

Usage Scenario Examples:

- Researchers use the PixelProse dataset to train an image description generation model that automatically writes descriptions for images on social media.

- Developers use the dataset to build a visual question answering app that answers users’ questions about the content of images.

- Educational institutions use PixelProse as a teaching resource to help students understand the fundamentals of image recognition and natural language processing.

Features:

- Provides over 16M image-text pairs.

- Supports multiple tasks, such as image-to-text and text-to-image.

- Covers several modalities, including tabular and text data.

- The data is stored in Parquet format for easy processing in machine learning pipelines.

- Contains detailed image descriptions for training complex visual-language models.

- The dataset is divided into three parts: CommonPool, CC12M and RedCaps.

- Provides image EXIF information and a SHA256 hash value for each image to support data integrity checks.
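
The SHA256 value can be used to check that a downloaded image is the same one that was described. The following is a minimal sketch, assuming the stored value is the hex-encoded SHA256 digest of the raw image bytes; the helper name `matches_sha256` is hypothetical and not part of any dataset tooling.

```python
import hashlib


def matches_sha256(image_bytes: bytes, expected_hex: str) -> bool:
    """Return True if the SHA256 digest of image_bytes equals expected_hex."""
    # Assumption: the dataset stores a hex-encoded digest of the raw image bytes.
    actual_hex = hashlib.sha256(image_bytes).hexdigest()
    # Compare case-insensitively and ignore surrounding whitespace.
    return actual_hex == expected_hex.strip().lower()
```

An image whose digest does not match has probably changed at its URL since the description was generated, so it can be skipped during training.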

Steps for Use:

- Step 1: Visit the Hugging Face website and search for the PixelProse dataset.

- Step 2: Choose a suitable download method, such as Git LFS, the Hugging Face API or a direct link, and download the Parquet files.

- Step 3: Download the corresponding images using the URLs in the Parquet files.

- Step 4: Load and preprocess the dataset according to your research or development needs.

- Step 5: Train or test a visual-language model using the dataset.

- Step 6: Evaluate model performance and adjust model parameters as needed.

- Step 7: Apply the trained model to a practical problem or further research.
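
Steps 1 to 4 can be sketched in Python as follows. This is an illustration under assumptions, not an official loader: the repository ID `tomg-group-umd/pixelprose`, the split name `train`, and the column names `url` and `vlm_caption` are assumptions that should be checked against the dataset card, and all helper names are hypothetical. The Hugging Face `datasets` library is imported lazily, so the download helpers work without it.

```python
import urllib.request
from typing import Any, Callable, Dict, Iterable, Iterator, Tuple


def stream_rows(repo_id: str = "tomg-group-umd/pixelprose", split: str = "train"):
    """Stream metadata rows from the Hugging Face Hub without a full download."""
    # Lazy import: requires `pip install datasets`.
    from datasets import load_dataset

    # repo_id and split are assumptions; confirm them on the dataset card.
    return load_dataset(repo_id, split=split, streaming=True)


def fetch_url(url: str, timeout: float = 10.0) -> bytes:
    """Download the raw bytes behind a URL."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def iter_image_caption_pairs(
    rows: Iterable[Dict[str, Any]],
    fetch: Callable[[str], bytes] = fetch_url,
    url_key: str = "url",              # assumed column name
    caption_key: str = "vlm_caption",  # assumed column name
) -> Iterator[Tuple[bytes, str]]:
    """Yield (image_bytes, caption) pairs, skipping rows whose image cannot be fetched."""
    for row in rows:
        try:
            image_bytes = fetch(row[url_key])
        except (OSError, ValueError):
            # Dead links and malformed URLs are expected at this scale; skip them.
            continue
        yield image_bytes, row[caption_key]
```

With network access and `datasets` installed, `iter_image_caption_pairs(stream_rows())` yields pairs that can be decoded and preprocessed before training. Passing a custom `fetch` function makes it easy to add caching or retries, or to test the pipeline offline.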

Tool Tags: Image description, visual-language model