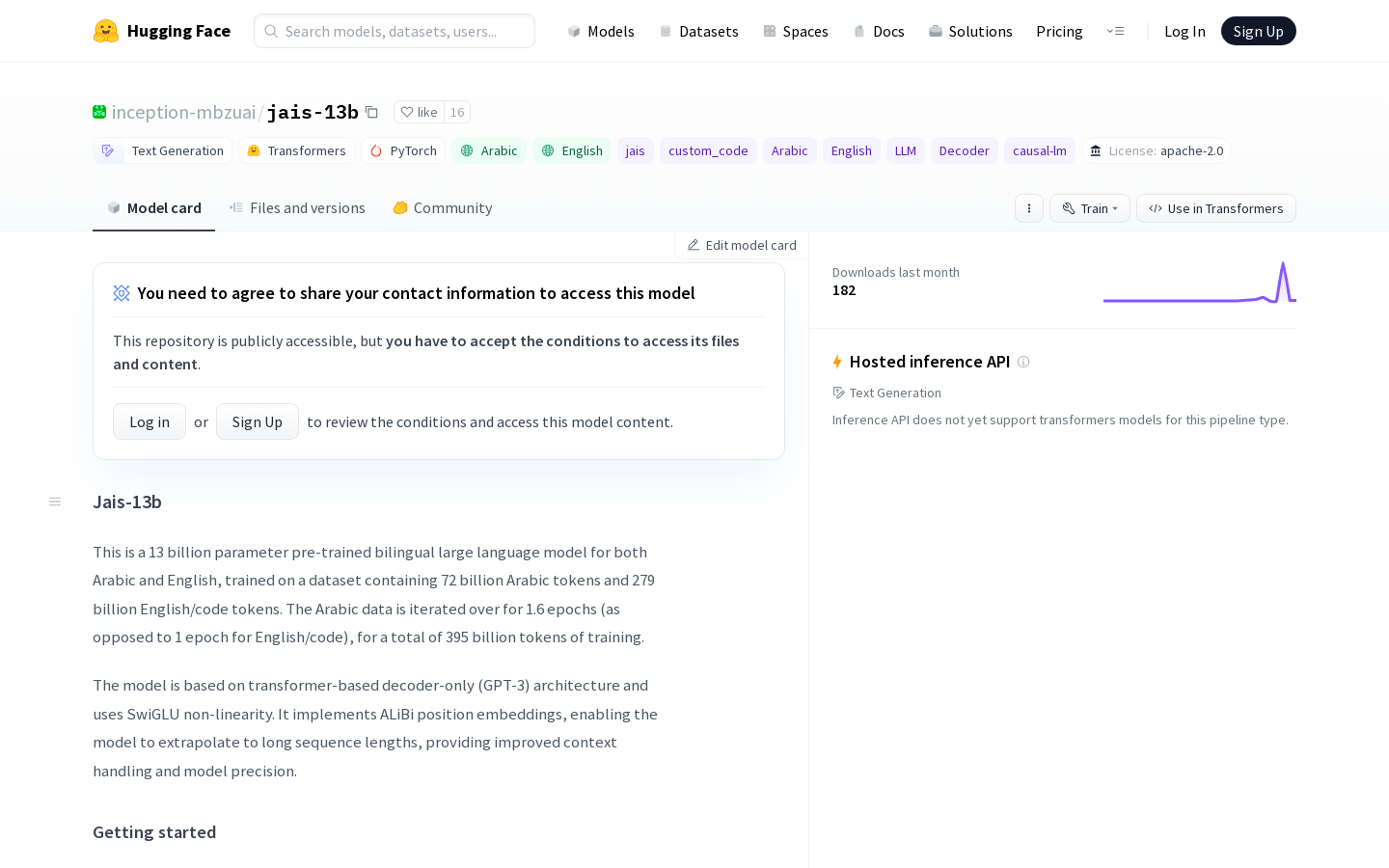

This is a pre-trained bilingual large language model with 13 billion parameters that supports Arabic and English. It was trained on a dataset of 72 billion Arabic tokens and 279 billion English/code tokens. The Arabic data was seen for 1.6 epochs (compared to 1 epoch for English/code), for a total of 395 billion training tokens. The model uses a decoder-only Transformer architecture (GPT-3 style) with the SwiGLU non-linear activation function. It uses ALiBi position embeddings, which can extrapolate to sequence lengths longer than those seen in training, improving context handling and model accuracy.
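
To make the ALiBi idea concrete, here is a minimal pure-Python sketch of the linear attention bias it adds to attention logits. The listing does not show the model's actual implementation, so the function names `alibi_slopes` and `alibi_bias`, the head count, and the power-of-two restriction are all assumptions chosen for illustration; the slope formula follows the geometric sequence commonly described for ALiBi.

```python
import math


def alibi_slopes(num_heads):
    """Per-head slopes 2^(-8*h/num_heads) for h = 1..num_heads.

    Assumption: this sketch only handles a power-of-two head count.
    """
    if num_heads <= 0 or num_heads & (num_heads - 1):
        raise ValueError("this sketch only handles a power-of-two number of heads")
    return [2.0 ** (-8.0 * h / num_heads) for h in range(1, num_heads + 1)]


def alibi_bias(num_heads, seq_len):
    """Return bias[h][i][j], the value added to the logit of query i and key j.

    Past keys are penalized in proportion to their distance (i - j);
    future keys (j > i) get -inf, which acts as the causal mask.
    """
    slopes = alibi_slopes(num_heads)
    return [
        [
            [-m * (i - j) if j <= i else -math.inf for j in range(seq_len)]
            for i in range(seq_len)
        ]
        for m in slopes
    ]


if __name__ == "__main__":
    # Hypothetical sizes, not taken from the model described above.
    bias = alibi_bias(num_heads=8, seq_len=4)
    print(bias[0][3])  # penalties for the last query position, first head
```

Because the bias depends only on the distance between query and key, the same function can be called with any `seq_len`, with no learned position table to outgrow. That property is what allows extrapolation to longer sequences.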

Target Audience:

- Research purposes

- Commercial purposes such as chatbots, customer service, etc.

Example Use Cases:

- Serving as a foundation model for Arabic natural language processing research

- Building applications with Arabic-language functionality

- Fine-tuning for downstream tasks like chatbots

Tool Features:

- Supports generative dialogue in Arabic and English

- Can be fine-tuned for specific downstream tasks

- Provides context-aware capabilities

- Supports long sequence generation

Tool Tags: Chatbot, Intelligent Chat